
How to call values from a table and then do the minerr() calculation?


Hi:

This is the reply I got from VladimirN in Creo.

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

This is a built-in Mathcad function. Minerr(var1, var2, ...) - returns the values of var1, var2, ..., coming closest to satisfying a system of equations and constraints in a Solve Block. Returns a scalar if only one argument, otherwise returns a vector of answers. If Minerr cannot converge, it returns the results of the last iteration.

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

I have two questions:

1) As I read the minerr() documentation, var1, var2, ... should be either integers or complex numbers.

Can var1, var2 be vectors?

2) Is there any way to read var1, var2 from a separate table and then pass them into minerr()?


- Labels: Statistics_Analysis


speed up the calculation for a larger set of parameters

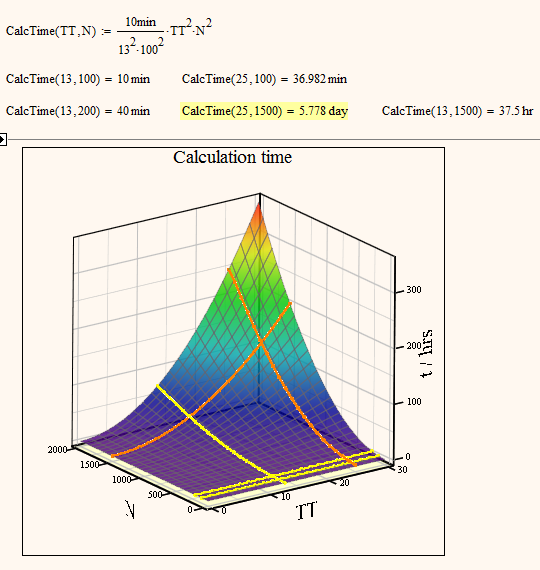

The time taken for non-linear least squares scales as the square of the number of residuals and as the square of the number of fitted parameters. Back in the days of mainframe computers that occupied an entire room and had 1/10000th of the processing power of my current smart phone, I ran some least squares optimizations that took two days to converge. So the length of time it takes to solve a "large" problem hasn't changed, just the definition of "large" has.


Richard Jackson wrote:

speed up the calculation for a larger set of parameters

The time taken for non-linear least squares scales as the square of the number of residuals and as the square of the number of fitted parameters.

As I understand it, Chih-Yu Jen will have 25 parameters to be fitted and a residual vector of approx. 1500 elements (or 3000 if real and imaginary parts are stacked as per your suggestion). Every trial with a different set of parameters TT will have to call a function make_Theory(TT), which returns a vector of 1500 complex numbers. The method to obtain those values is rather complex and would involve creating and multiplying 25 2x2 matrices for every single value of that vector. Some methods for doing this are in this post http://communities.ptc.com/message/211040#211040 and the following one (which is more compact, maybe a bit faster, and has two errors (m-->M, n-->TT)).

So I think that speeding up make_Theory(TT) would help in speeding up the overall calculation.

Back in the days of mainframe computers that occupied an entire room and had 1/10000th of the processing power of my current smart phone, I ran some least squares optimizations that took two days to converge.

You are showing your age

But two days would be fine. In a prior post in this thread, when Chih-Yu Jen sent some information about calc time with fewer parameters and a smaller residual vector, I estimated that the calculation with the real values would take up to three weeks, or five and a half days with a minor improvement of mine. Not sure if that would have been true; I think he didn't bother trying. I guess he will let us know how speedy we got in the meantime.


Hi Werner:

I would like to thank you and Richard for helping me with the coding.

Now I am trying to find constraints for a better approach.

I haven't tried the stack idea and still use the equation containing absolute value.

For 13 TTs and 100 elements, each run takes 10-20 minutes.

Doubling the number of elements doubles the time.

However, doubling the number of TTs takes much longer.

In the next few days I will try 25 TTs 100 elements and then 25 TTs 1500 elements to check the calculation time.

I will let you know how many day(s) it will take.

In the future I believe I still have questions to ask.

Here I want to thank you again for your great help.
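For readers wondering what the "stack idea" versus the absolute-value form looks like in practice, here is a minimal Python sketch (not Mathcad, and not the worksheet from this thread). The function names and the sample numbers are made up for illustration. It only shows that both residual vectors give the same sum of squares, while the stacked one is twice as long and avoids the absolute value, which is not differentiable where a difference is exactly zero.

```python
def stacked_residuals(measured, theory):
    """Return real parts followed by imaginary parts of (theory - measured)."""
    diffs = [t - m for t, m in zip(theory, measured)]
    return [d.real for d in diffs] + [d.imag for d in diffs]


def abs_residuals(measured, theory):
    """Return the magnitude of each complex difference."""
    return [abs(t - m) for t, m in zip(theory, measured)]


def sum_of_squares(values):
    """Sum of squared entries, the quantity a least-squares fit minimizes."""
    return sum(v * v for v in values)


# Hypothetical sample data, not taken from the thread.
measured = [1 + 2j, 0.5 - 1j, -2 + 0.25j]
theory = [1.5 + 1j, 0.5 - 0.5j, -1 + 0.25j]

stacked = stacked_residuals(measured, theory)
magnitudes = abs_residuals(measured, theory)

print(len(stacked), len(magnitudes))  # stacked vector is twice as long
print(sum_of_squares(stacked), sum_of_squares(magnitudes))
```

With 1500 complex values this is where the "3000 if real and imaginary parts are stacked" figure comes from.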


For 13 TTs 100 elements, each run takes 10-20mins.

Double the element will double the time.

Time it. I think that actually it will take four times as long.


Hi:

13TTs/100 elements took me 20 mins and 25TTs/100 elements took me 1.5hr.

Close to your calculation.

I expect 25TTs/1500 elements will take me 1.5*15 hrs (I will try it soon).

I think I will need to figure out the code structure optimization soon.


In the next few days I will try 25 TTs 100 elements and then 25 TTs 1500 elements to check the calculation time.

13/100 take 10 to 20 minutes

According to Richard 13/200 should then take 15 to 30 minutes,

25/100 will take 35 to 70 minutes and

25/1500 approx. 2 1/2 to 5 hours.


Square, not square root. If 13/100 takes 10 to 20 minutes, I expect 13/200 to take 40 to 80 minutes. 25/1500 I expect to take 14 to 28 hours. That's less than two days though.


Richard Jackson wrote:

Square, not square root.

Oh yes, my fault. Was too quick in reading your second post about calc time.

If 13/100 takes 10 to 20 minutes, I expect 13/200 to take 40 to 80 minutes. 25/1500 I expect to take 14 to 28 hours. That's less than two days though

Hmm, something wrong with the following calculations? And those are the minimum values based on 13/100 -> 10 minutes.

Or, as an estimate: doubling either number (TT or N) multiplies the calc time by four. 25 is approx. 13 doubled, and 1500 is approx. 100 doubled four times. So in total we have five doublings, and we have to calculate 10 minutes times 4^5, which results in somewhat more than 7 days (that would be 26/1600).

And as for the other post: yes, I know that I'm showing my age. Not in a wheelchair either, but maybe close 😉


Hmm, something wrong with my calculations here?

My mistake. I calculated the factor, but forgot to multiply by the 10 minutes. I did it on a calculator, so I guess it's a good example of why one should use Mathcad, not a pocket calculator! With the correct calculation I get 5.8 days. Ouch! Time to buy either a much faster computer, or a UPS!
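For anyone who wants to redo these estimates without a pocket calculator, here is a small Python sketch of the arithmetic. It assumes the rule stated earlier in the thread (time proportional to the square of the number of parameters times the square of the number of residuals); the function name is just for illustration.

```python
def scaled_minutes(base_minutes, base_params, base_residuals, params, residuals):
    """Scale a measured run time, assuming time ~ params**2 * residuals**2."""
    param_factor = (params / base_params) ** 2
    residual_factor = (residuals / base_residuals) ** 2
    return base_minutes * param_factor * residual_factor


MINUTES_PER_DAY = 24 * 60

# 13 TTs / 200 elements, from a 10-minute baseline at 13/100.
print(scaled_minutes(10, 13, 100, 13, 200))      # 40.0

# 25 TTs / 1500 elements from the same baseline, converted to days.
days = scaled_minutes(10, 13, 100, 25, 1500) / MINUTES_PER_DAY
print(round(days, 1))                            # 5.8

# The doubling shortcut: five doublings, each costing a factor of four.
print(round(10 * 4 ** 5 / MINUTES_PER_DAY, 1))   # 7.1
```

The scale factor for 25/1500 is about 832, which read as minutes gives roughly 14 hours. That is the earlier "14 to 28 hours" figure before multiplying by the 10 to 20 minute baseline.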

Not in the wheelchair, too, but maybe close 😉

If the time comes, keep on rollin'. As long as the mind works, life has many rewards. Anyone that disagrees can go argue the point with Stephen Hawking.


Ouch! Time to buy either a much faster computer, or a UPS!

The latter would be handy in any case. A better algorithm or, in our case here, a better way to calculate the vector Theory could be worth more than a machine four times as fast.

If the time comes, keep on rollin'. As long as the mind works, life has many rewards. Anyone that disagrees can go argue the point with Stephen Hawking

Good point!


You are showing your age

I think I will make the observation that computing power has advanced extremely rapidly. The PC was only invented just over 30 years ago, and at that time, and for many years afterwards, had such limited processing power that serious computing was still in the domain of mainframes. I'm not in a wheelchair quite yet.


I'm not in a wheelchair quite yet

Glad to hear that!

Students' priority was rather low at university, and I remember using punched cards and having to wait until the next day to get the printed output - unimaginable nowadays to wait a day for "Hello world!". And then being privileged and allowed to use a terminal for the first time - what incredible comfort. Seems like things have changed a bit in the meantime.


Punched cards? You are showing your age. I started out with paper tapes, a huge advance over those primitive cards! I always had terminal access though (but it was a teleprinter to begin with, not a fancy CRT terminal), and only used the tapes for data transfer. I had to walk halfway across the university campus to drop them off at central computing, and I could access the data the next day. Now I can access data on the other side of the world in seconds, using a phone! Things have changed more than a bit, and in such a short time that even as a technophile it blows my mind!


Hi:

13TTs/100 elements took me 20 mins and 25TTs/100 elements took me 1.5hr.

25TTs/1500 elements should take 1.5*15hr (I will test it soon).

Close to your calculation.


13TTs/100 elements took me 20 mins and 25TTs/100 elements took me 1.5hr.

25TTs/1500 elements should take 1.5*15hr (I will test it soon).

Close to yours calculation.

No, unfortunately it's not linear but quadratic!

Look at http://communities.ptc.com/message/211198#211198 and change the 10min to 20 min.

According to that 25/1500 will take much more than a week!


OK!!

The time I gave comes from my laptop simulation.

Hopefully the office desktop will be faster.

I will time the run on that one.

But anyway it's not an acceptable time.


But anyway it's not an acceptable time.

Yes, speeding up calculation of "Theory" would be mandatory if you really need 25 parameters and 1500 residuals.


Hi:

25 TTs/200 elements took me 3 hrs (twice the time for 25 TTs/100 elements).

25 TTs/1500 elements took me about 2 days.

And I think it also depends on the complexity of the object.

I ran a simpler object and 25 TTs/200 elements took me just 5-10 mins.

I am not sure whether the reason is that it reaches the TOL/CTOL value.

The calculation time shows no big difference when I change TOL/CTOL from 1E-2 to 1E-5.


25TTs/ 1500 elements tooks me about 2days.

And was the result satisfactory?

So the relationship between the number of TTs and calc time seems not to be quadratic (fortunately), but not linear either.
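To put a number on "not quadratic, but not linear either", one can back out the exponent p in time ~ size^p from any two timings. A rough Python sketch using the figures reported in this thread follows. It assumes "about 2 days" means 48 hours and that all runs were made on the same machine with the same object, which the thread does not guarantee.

```python
import math


def scaling_exponent(size_a, time_a, size_b, time_b):
    """Exponent p in time ~ size**p implied by two (size, time) measurements."""
    return math.log(time_b / time_a) / math.log(size_b / size_a)


# Timings in hours as reported in the thread; 48.0 is an assumed value
# for "about 2 days".
tt_exponent = scaling_exponent(13, 20 / 60, 25, 1.5)        # TTs, 100 elements
elem_exponent_small = scaling_exponent(100, 1.5, 200, 3.0)  # elements, 25 TTs
elem_exponent_large = scaling_exponent(200, 3.0, 1500, 48.0)

print(round(tt_exponent, 2))
print(round(elem_exponent_small, 2))
print(round(elem_exponent_large, 2))
```

On these few data points the exponent for the number of TTs comes out a bit above 2, while the exponent for the number of elements lies between 1 and 2. Two or three timings are far too few to call this a law, so treat it as a sanity check only.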

And I think it also depends on the complication of the object.

Sure, that's the reason a simplification/speed-up of the calculation which is done in every step of the iteration (in your case the Make_Theory() routine) would greatly influence the overall calculation time. In my demonstration template the calculation time was much smaller just because a single iteration step takes much less time than in your task.


Hi:

My original hope was that minerr() could easily give a close answer without any additional settings.

Now it seems that constraints are required.

Maybe it is like GPS: to find the shortest route, more clues are needed.

I have played with different built-in algorithms/options in Mathcad, but only LMA gives me a better result.

However, unlike the other algorithms, LMA has no options to play with.

Now I observe that the calculated TTs are close to the constraints I set up (I set max/min values and relationships between TTs, e.g. TT1<0.9*TT2, 1<TT3<2......).

Is this the right way to set up constraints?

Is there any parameter that determines how close the calculated TTs will be to the constraints?


My original hoping is that minerr() can easily give a close answer without any additional setting.

Now it seems like constriants are required.

Or a slight rework of the model?

However, not like other algorithms, LMA has no options to play.

Yes, thats true. But thats inherent to this algorithm.

Now I observe that the calculated TTs are close to setup constraints (I setup max/min values and relationship between TTs. EX: TT1<0.9*TT2, 1<TT3<2......).

Are they right ways to setup constraints?

Seems so, if that's what you need/want. And Richard has shown a way to weight those constraints, if that seems necessary.

Is there any parameter to determine how close will be between calculated TTs/constraints?

You mean something else besides ERR? Nothing I would know of. But you may setup your own function to compare the fits. You would have to determine how to weight a miss from your constraints.

Just being curious - did you compare the version V2 and V3 of make_Theory and was there a significant difference?

Afterthought: From your reports it seems that a change of TT values changes the vector Theory a lot, and maybe that's the reason the solution is so close (too close?) to the guess values you start with. Or we have very slow convergence and minerr stops too soon because of the limit on iteration steps you mentioned. In the latter case it could make sense to give minimize a try, I guess.


Hi:

What do you mean by "slight rework of the model"?

Are you talking about the code structure, or about writing the "minerr() function" myself?

About this part: I am not talking about ERR. I know minerr() is looking for TTs with min ERR and default TOL/CTOL=0.001.

"You mean something else besides ERR? Nothing I would know of. But you may setup your own function to compare the fits. You would have to determine how to weight a miss from your constraints."

What I mean is: my observation now is that the calculated TTs are close to my constraints.

Is there any way to know how far each calculated TT can differ from my constraints?

For example: if I define TT1<0.9*TT2, what will be the range of the ratio TT1/TT2? 0.7 to 0.9?


I think you made your reply before I edited my post and added the question about V2 vs V3 and the suggestion of using minimize().

ERR takes the additional constraints into consideration, too. It's not only the length of the difference vector between measurement and Theory. You can see this if, in my template "MinErrTest", you add T[1<T[2 as a constraint (which does not make much sense and will end up in a much worse fit). You can observe that the value of ERR is now different from the vector length calculated below. Another way to write that constraint is T[2-T[1>0. If you choose 1000*(T[2-T[1)>0 you weight this constraint more strongly - watch the values of the T's and ERR.

What I meant by looking at the model was looking at the rather complicated way you get from TT to Theory. The way you have shown it so far requires taking values from the same sheet the measured data comes from, so it looks like it's not pure theory - but maybe I'm wrong.

As for your last question: I'm not sure what you mean by "any way to know how far calculated TTs are different from constraint". When the iteration stops you see it anyway!? And what value would you expect if you demand TT1<0.9*TT2 and you get TT1=10 and TT2=9.1, and which if TT2=0.2?

TT1<0.9*TT2 would mean that the ratio TT1/TT2 is in the range from -infinity to 0.9.

If you want the ratio between 0.7 and 0.9 you would write 0.7*T2<T1<0.9*T2, or 0.7<T1/T2<0.9 if you are sure T2 is not getting the value 0.
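One possible way to define your own measure of "how far a result misses a constraint" is sketched below in Python. Both helper names are hypothetical, and the violation measure (the amount by which TT1 exceeds 0.9*TT2) is just one reasonable choice, not something Mathcad reports.

```python
def upper_ratio_violation(t1, t2, ratio=0.9):
    """Amount by which t1 exceeds ratio * t2; 0.0 when t1 < ratio * t2 holds."""
    return max(0.0, t1 - ratio * t2)


def ratio_in_band(t1, t2, low=0.7, high=0.9):
    """True when low*t2 < t1 < high*t2; no division, so t2 == 0 is safe."""
    return low * t2 < t1 < high * t2


# The two cases asked about above: TT1=10 with TT2=9.1 and with TT2=0.2.
print(upper_ratio_violation(10, 9.1))
print(upper_ratio_violation(10, 0.2))

# A point inside the band, one below it, and the degenerate t2 == 0 case.
print(ratio_in_band(0.8, 1.0), ratio_in_band(0.5, 1.0), ratio_in_band(0.0, 0.0))
```

The first two calls give roughly 1.81 and 9.82, so the same TT1 is a much bigger miss when TT2 is small. Note that the product form 0.7*T2<T1<0.9*T2 and the ratio form 0.7<T1/T2<0.9 only agree for positive T2: with T1=-0.8 and T2=-1 the ratio is 0.8, yet the product form is not satisfied.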


Hi:

Now I understand T[2=T(subscript 2). I was using the same constraint. You also reminded me of the way to make a constraint stronger by using 1000*(T[2-T[1)>0. Thanks!!

The concept of my work requires looking up the TT-induced "n" (taken as empirical values) from the same sheet (.xls file). To me, the theory means those equations (make_theory(TT), make_ntheta, make_Msum). Maybe this difference confused you. But the current code is what we want for now.

While using LMA, I found that minerr() tries to find proper TTs in sequence (meaning TT0 first, then TT1, TT2....), not in parallel. I think so because sometimes the calculated TT0=1.1TT1 (overshoot), even though I set up TT0<0.9*TT1.

It usually happens for the first TTs (TT0/TT1/TT2) but not for TT2-TT25.

To me that means that if changing TT0 is enough to reach a minimum ERR, the other TTs won't be adjusted as I expect. Maybe this is the concept of LMA (I am not sure).

I will try the stronger constraint and see how it works.

I understand constraints are just for reference, not exact rules.

Maybe that's the reason the calculated TT0=1.1TT1 (overshoot) when I set up TT0<0.9*TT1.

My curiosity is: if I set up TT0<0.9*TT1 and TT1=1, what could be the possible TT0?

I know "TT1<0.9*TT2 would mean that the ratio TT1/TT2 is in the range from -infinity to 0.9."

In my experience here, TT1/TT2 always tends toward values close to 0.9.

If I knew the possible range (maybe set up in Mathcad like CTOL), it would be easier to set up constraints.

Do you know in what situations people set up constraints when they use minerr()?

My goal is to use minerr() without setting up constraints. Since I am dealing with multiple TTs and approaching just one solution, maybe I cannot avoid it.


Constraints would be necessary if there are multiple possible "solutions" and may help to find the one which fits "wishes" one may have.

Don't think that LMA would just try to change one parameter after the other but rather would work with the gradient vector (numeric derivatives) to do its job. You can think of a 25-dimensional "surface" in your case. For further details you may google for "Levenberg-Marquardt".
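To illustrate what "numeric derivatives" means here, the following Python sketch builds a forward-difference Jacobian and counts how often the model function is called. It is only a generic illustration; how Mathcad's LMA actually forms its derivatives is not documented in this thread, and toy_model is a made-up stand-in for make_Theory(TT).

```python
def forward_difference_jacobian(func, params, step=1e-6):
    """Approximate d(func_i)/d(param_j) with one extra func call per parameter."""
    base = func(params)
    jacobian = [[0.0] * len(params) for _ in base]
    for j in range(len(params)):
        shifted = list(params)
        shifted[j] += step          # perturb only parameter j
        moved = func(shifted)
        for i in range(len(base)):
            jacobian[i][j] = (moved[i] - base[i]) / step
    return jacobian


calls = 0


def toy_model(params):
    """Hypothetical stand-in for an expensive model such as make_Theory(TT)."""
    global calls
    calls += 1
    a, b = params
    return [a * b, a + b, a * a]


J = forward_difference_jacobian(toy_model, [2.0, 3.0])
print(calls)  # 3: one baseline call plus one call per parameter
for row in J:
    print([round(value, 3) for value in row])
```

All parameters are perturbed around the same point and the resulting matrix is used as a whole, which is why the fit moves them together rather than one after the other. Under this scheme 25 parameters would need 26 model calls per Jacobian, so any speed-up of the model function pays off many times over.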

If you don't want to use additional constraints I guess you will have to settle for what minerr will give you.

BTW - what are the results with minimize()? Here CTOL would have more effect, I guess.


Hi:

My expected solution should be a gradient.

I think minerr() with LMA is the best choice for now.

It looks like minimize() doesn't have an LMA option.

Using minimize(), with or without constraints, does not give a better solution.


While using LMA, I found that minerr() try to find proper TTs in sequence (means TT0 first, then TT1, TT2....), not in parallel.

No, it finds them in parallel.

I understand constraints are just for reference, not exact rule.

Maybe that's the reason calculated TT0=1.1TT1 (overshoot) when I setup TT0<0.9*TT1.

My curiosity is: if I setup TT0<0.9*TT1 and TT1=1, what could be the possible TT0?

I know "TT1<0.9*TT2 would mean that the ratio TT1/TT2 is in the range from -infinity to 0.9."

In minerr a constraint is a soft constraint, not a hard constraint. If TT1<0.9*TT2 means that the ratio TT1/TT2 is in the range from -infinity to 0.9, that's a hard constraint, because the ratio cannot exceed 0.9. That's what you get with Find or minimize, but if you want a hard constraint in minerr you must weight the constraint very heavily.

If I know the possible range (maybe setup in MathCad like CTOL), it's easier to setup constraints.

There is no way to know the exact range. The errors in the constraints are minimized along with the errors in the residuals. In essence, everything is a constraint; the residuals are just constraints where certain differences should be equal to zero. So the possible magnitude of the error in a constraint depends on the possible magnitudes of the errors in all the other constraints, which includes the residuals. If you want a hard constraint, weight it heavily.
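A toy model of this trade-off, sketched in Python: one parameter t whose unconstrained best fit is 1.1, plus a soft constraint t < 0.9 whose violation is multiplied by a weight before being squared and added to the error. This is an illustrative assumption about how a soft constraint behaves, not Mathcad's actual minerr implementation, and soft_fit is a made-up name.

```python
def soft_fit(target, bound, weight, iterations=200):
    """Minimize (t - target)**2 + (weight * max(0, t - bound))**2 over t.

    The objective is convex, so a ternary search on an interval that
    brackets the minimum converges to it.
    """
    def cost(t):
        return (t - target) ** 2 + (weight * max(0.0, t - bound)) ** 2

    low, high = min(target, bound), max(target, bound)
    for _ in range(iterations):
        left = low + (high - low) / 3
        right = high - (high - low) / 3
        if cost(left) < cost(right):
            high = right    # minimum lies in [low, right]
        else:
            low = left      # minimum lies in [left, high]
    return (low + high) / 2


for weight in (1, 10, 1000):
    print(weight, round(soft_fit(target=1.1, bound=0.9, weight=weight), 4))
```

For this toy problem the minimum is at t = (target + weight^2 * bound) / (1 + weight^2) whenever the target lies above the bound. With weight 1 the result sits halfway between 0.9 and 1.1, so the constraint is clearly overshot; with weight 1000 it is within about 2e-7 of 0.9, which is effectively a hard constraint.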


Hi Richard:

Thanks to you and Werner for your valuable suggestions.

I will let you know the calculation time once I finish it.
