Analytics Redundancy Filter

Hello community,

I'm trying to understand what it means to enable the redundancy filter when working with signals in ThingWorx Analytics. The documentation reads as follows:

"The Redundancy Filter operates by calculating the mutual information for each feature with the goal variable. It then iteratively ranks the features, in combination with previously-selected features, according to the amount of information gain they provide. Features that provide more information gain are ranked higher. During training, this ranking is used to improve feature selection for the predictive model. The number of features indicated by Max Fields is selected from the top of the ranking."

I haven't found any more details, and I don't think it's thoroughly explained; some statements seem a little ambiguous. Can someone shed a little more light on this functionality?

Accepted Solutions

Your understanding of the concept of redundancy filters is directionally correct. We are looking to penalize features that are providing redundant information, as in your example of two features (motor power and motor current) providing very similar information about a specific goal (for example, motor downtime in a future time period).

One thing to clarify is that we are not evaluating the correlation between the features; instead, we are interested in “information gain”: how much new, non-redundant information each new feature provides. The redundancy filter runs signals through an iterative process to identify the next feature that provides the most information gain in relation to the goal. At each iteration, we choose the feature that provides the highest information gain given the features already selected.

In your motor example, we would first identify the feature with the highest information gain, which at the first step is simply its mutual information (MI) with the goal: motor power, with an MI of 0.6. We then iterate through the remaining features to determine which one has the most information gain (IG), where: information gain for a new feature = [information we will have about the goal from both motor power AND the new feature] - [information motor power already gave us about the goal]. Once we have the next feature with the most information gain, we cycle through the features again to find the one with the largest information gain given that the first two features have already been selected.
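Written out as a formula, the bracketed definition above looks like the following. The notation is an assumption introduced here for illustration ($Y$ for the goal, $S$ for the set of features already selected, $X$ for a candidate feature); the thread does not say how ThingWorx Analytics estimates these quantities internally.

$$
\mathrm{IG}(X \mid S) \;=\; I(Y;\, S \cup \{X\}) \;-\; I(Y;\, S) \;=\; I(Y;\, X \mid S)
$$

The last equality is the chain rule for mutual information, so the information gain is the conditional mutual information between the candidate and the goal given the selected features. For the motor example with $S = \{\text{power}\}$:

$$
\mathrm{IG}(\text{current} \mid \text{power}) \;=\; I(Y;\, \text{power}, \text{current}) \;-\; I(Y;\, \text{power})
$$

If motor current carried essentially nothing about the goal beyond what motor power already carries, the two terms on the right would be nearly equal and the gain would be close to zero, even though motor current on its own has an MI of 0.59 with the goal.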

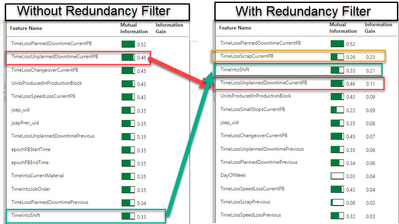

Below is an example showing the results of signals without the redundancy filter and then with the redundancy filter. In this example, we want to find which features related to operating conditions in a factory provide the most information about time losses in a future production block. The feature TimeLossPlannedDowntimeCurrentPB (Planned Downtime) provides the highest MI on its own. Without the redundancy filter, the next feature identified is TimeLossUnplannedDowntimeCurrentPB (Unplanned Downtime). With the redundancy filter, TimeLossUnplannedDowntimeCurrentPB (Unplanned Downtime) drops down the list (but not out of it), because the information it provides is already provided by the TimeLossPlannedDowntimeCurrentPB (Planned Downtime) feature. Instead, the next feature that provides the most information gain is TimeLossScrapCurrentPB (Scrap), a feature that was much further down the list when only mutual information was evaluated. Going further down the list with the redundancy filter enabled, the features are prioritized by information gain: how much more information each feature provides given that the features higher in the list have already been selected.
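To make the reordering described above concrete, here is a minimal Python sketch of a greedy ranking by information gain, compared with a plain ranking by mutual information. It is not the ThingWorx Analytics implementation: the plug-in entropy estimator, the function names, and the synthetic data are all assumptions made for illustration. The column names only echo the example above. In this toy data, `unplanned` is built as a near copy of `planned`, and `scrap` is independent of both.

```python
import math
from collections import Counter

def entropy(observations):
    """Plug-in Shannon entropy, in bits, of a list of hashable observations."""
    n = len(observations)
    return -sum(c / n * math.log2(c / n) for c in Counter(observations).values())

def mutual_info(features, goal):
    """I(goal; features), treating the (non-empty) list of columns jointly."""
    joint_x = list(zip(*features))
    joint_xy = list(zip(goal, *features))
    return entropy(joint_x) + entropy(goal) - entropy(joint_xy)

def rank_by_mi(columns, goal):
    """Rank each feature by its own mutual information with the goal."""
    return sorted(columns, key=lambda name: mutual_info([columns[name]], goal),
                  reverse=True)

def rank_by_information_gain(columns, goal):
    """Greedy ranking: at each step pick the feature with the largest
    I(goal; selected + candidate) - I(goal; selected)."""
    selected, remaining = [], list(columns)
    while remaining:
        chosen = [columns[s] for s in selected]
        base = mutual_info(chosen, goal) if chosen else 0.0
        gains = {name: mutual_info(chosen + [columns[name]], goal) - base
                 for name in remaining}
        best = max(gains, key=gains.get)
        selected.append(best)
        remaining.remove(best)
    return selected

# Synthetic, fully enumerated toy data (an assumption, not real factory data).
# k is a helper index: "unplanned" disagrees with "planned" only when k == 0.
rows = [(p, s, k) for p in (0, 1) for s in (0, 1) for k in range(20)]
columns = {
    "planned": [p for p, s, k in rows],
    "unplanned": [p if k else 1 - p for p, s, k in rows],
    "scrap": [s for p, s, k in rows],
}
# The goal depends on "planned" and, when planned == 1, also on "scrap".
goal = [2 + s if p else 0 for p, s, k in rows]

print(rank_by_mi(columns, goal))                # ['planned', 'unplanned', 'scrap']
print(rank_by_information_gain(columns, goal))  # ['planned', 'scrap', 'unplanned']
```

In this toy setup, `unplanned` has a higher MI with the goal than `scrap` on its own, but once `planned` is selected it adds essentially nothing, so `scrap` moves ahead of it. The redundant feature drops down the list without being removed from it, as in the example above.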

Hopefully this provides more details on the capability and how this capability enables us to maximize “information gain”: how much new, non-redundant information each new feature provides.

Thank you for posting your inquiry to the PTC Community.

I have requested one of our Analytics experts to review your request for clarification on Redundancy Filters.

At a basic level: if the signals job is run with redundancy filtering turned on, the features are ranked according to the amount of information gain that each feature provides in combination with previously selected features.

Screenshots of how it works can be found here: https://support.ptc.com/help/thingworx/analytics/r9/en/#page/analytics/analytics_builder/signals_overview.html

If you desire additional explanation, please let me know.

Regards,

Neel

Thank you for your answer @nsampat. After re-reading the documentation and a couple of Wikipedia articles in between, I think I finally made sense of it. I'm gonna make a summary of what I understood; please correct me if I'm wrong.

As a simple explanation, given that some features can be highly correlated with each other (e.g. motor power and motor current), if I want to train a model to predict another variable (e.g. humidity of a bulk product), it does me no good to include both of these correlated variables. Following the example, if I select the motor power as a good predictive feature, I gain no further information by adding the motor current, and I risk adding unnecessary complexity to the model. It may be beneficial to consider another feature and give less importance to the motor current.

What the redundancy filter does is, aside from calculating the mutual information between each feature and the goal, check whether the features are correlated with each other. If a pair of features is highly correlated, it picks the one that has the highest mutual information with the goal variable and gives it a higher rank than the other one. Circling back to the example, if the motor power has a mutual information score of 0.6 and the motor current 0.59 (with the goal variable), it'll assign a much higher rank to the motor power and lower the priority of the motor current, therefore avoiding redundant features.

If I can make a suggestion about the documentation: considering that TWA is geared towards people without a data science background, some concepts could be explained more clearly. On the other hand, I've seen that it is pretty common for technical software to at least mention the mathematical models/algorithms used, for further understanding and validation.

Just a suggestion to make the understanding of these concepts easier.

Regards,

You have a very good understanding of Redundancy Filters.

There are some additional details that are specific to how ThingWorx Analytics considers information gain. Our SME will want to provide additional information to you via this thread, and they will have to organize their reply.

Please keep an eye on this thread in the next couple of days for their response with additional info.

Regards,

Neel

Thank you Neel. The additional input would be great and very much appreciated. I'll keep an eye out.

Regards,

Rod
